Can you share your StorageCluster spec?

I am using this px-spec file to create it: https://gist.github.com/satyamodi/e8b4e41a2a2133494b211d78a74f41cf

So you're not installing from OperatorHub?

I am using a Terraform script to install the cluster and Portworx. I am installing the operator from the CLI using the YAML file below:

```yaml
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: kube-system-operatorgroup
  namespace: kube-system
spec:
  serviceAccount:
    metadata:
      creationTimestamp: null
  targetNamespaces:
  - kube-system
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  generation: 1
  name: portworx-certified
  namespace: kube-system
spec:
  channel: stable
  installPlanApproval: Automatic
  name: portworx-certified
  source: certified-operators
  sourceNamespace: openshift-marketplace
  startingCSV: portworx-operator.v1.4.0
```

You're mixing the install methods. Use either the Operator method or the spec (DaemonSet) method, not both.
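To check which method is actually present on a cluster, here is a minimal sketch. It assumes a logged-in `oc`, that an Operator install shows up as a `StorageCluster` object, and that the spec (DaemonSet) method creates a DaemonSet named `portworx` in `kube-system`; `px_install_mode` is a hypothetical helper name.

```bash
# Hypothetical helper: report which Portworx install method(s) exist in a namespace.
# Assumes `oc` is logged in to the cluster.
px_install_mode() {
  local ns=${1:-kube-system} has_sc=0 has_ds=0
  # Operator method: at least one StorageCluster object exists.
  if [ -n "$(oc get storagecluster -n "$ns" -o name 2>/dev/null)" ]; then
    has_sc=1
  fi
  # Spec method (assumption): a DaemonSet named "portworx" exists.
  if oc get daemonset portworx -n "$ns" -o name >/dev/null 2>&1; then
    has_ds=1
  fi
  case "${has_sc}${has_ds}" in
    11) echo mixed ;;
    10) echo operator ;;
    01) echo daemonset ;;
    *) echo none ;;
  esac
}
```

Running `px_install_mode kube-system` and getting `mixed` would match the situation described above.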

Can you uninstall and try again:

- Uninstall Portworx: `curl -fsL https://install.portworx.com/px-wipe | bash`
- Uninstall the Portworx Operator.
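A minimal sketch of the operator-removal step, assuming a logged-in `oc` and the Subscription name and namespace from the YAML above (`portworx-certified` in `kube-system`). Deleting the Subscription alone does not remove an OLM-managed operator; its ClusterServiceVersion (CSV) has to be deleted too. The sketch reads `status.installedCSV` because automatic install plan approval can move the cluster past `startingCSV`. `uninstall_px_operator` is a hypothetical name.

```bash
# Hypothetical helper: remove the Portworx operator installed through OLM.
# Defaults match the Subscription in the YAML above.
uninstall_px_operator() {
  local ns=${1:-kube-system} sub=${2:-portworx-certified} csv
  # With installPlanApproval: Automatic the installed CSV may be newer than startingCSV.
  csv=$(oc get subscription "$sub" -n "$ns" -o jsonpath='{.status.installedCSV}') || return 1
  # Deleting the Subscription stops further upgrades; deleting the CSV removes the operator.
  oc delete subscription "$sub" -n "$ns" || return 1
  if [ -n "$csv" ]; then
    oc delete clusterserviceversion "$csv" -n "$ns" || return 1
  fi
}
```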

Once everything is cleaned up, apply only your spec file to install.

Hi Sanjay,

it’s not about mixing the install methods; it doesn’t work on an OCP 4.5 cluster. We released the Terraform and AWS Quick Start for OCP 4.3 with Portworx earlier. Even if I do everything manually, Portworx does not come up on a 4.5 cluster. We have the same issue with the AWS Quick Start: Portworx works with OCP 4.3 but not with 4.5.

Can you please try the installation on a 4.5 cluster from your side?

Can you find the entry in the log file that starts with “Availability zone misma …” and send me that line in full? It looks like the volume was created in a different zone from the instance that is trying to claim it.

I have copied the lines that were cut off on screen: https://gist.github.com/satyamodi/1421e456f68e008f60f54b7b29354522

This is the error:

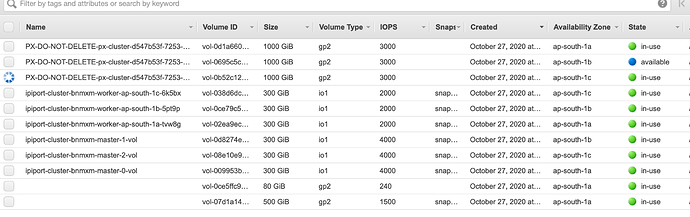

```
Availability zone mismatch for volume vol-0e65d466e1463c2db: (Volume Zone: ap-south-1b Instance Zone: ap-south-1a)
```
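To pull the volume ID and both zones out of the Portworx logs instead of reading screenshots, a small filter can help. This is a sketch that assumes the message keeps exactly the wording shown above; `parse_az_mismatch` is a hypothetical helper.

```bash
# Hypothetical helper: read log lines on stdin and print
# "<volume-id> <volume-zone> <instance-zone>" for each AZ mismatch message.
parse_az_mismatch() {
  sed -n 's/.*Availability zone mismatch for volume \(vol-[0-9a-f]*\): (Volume Zone: \([a-z0-9-]*\) Instance Zone: \([a-z0-9-]*\)).*/\1 \2 \3/p'
}
```

For example, `oc logs -n kube-system -l name=portworx --tail=-1 | parse_az_mismatch`, assuming the Portworx pods carry the `name=portworx` label.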

Can you check which zone your instance was launched in? Are your worker nodes properly distributed across zones A/B/C? From the error above, the instance is running in zone A and the volume was created in zone B.

If the zone A/B/C distribution is correct, there is a high chance that a previous uninstall was not cleaned up properly and left orphaned volumes behind. In that case, uninstall Portworx and delete those volumes from the AWS console.

Then install Portworx again. Let me know how it goes, or I can open a remote session to look at this.
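Both zones can also be checked from the AWS CLI. The sketch below compares the zone of an EBS volume with that of an EC2 instance; `check_az` is a hypothetical helper, and the instance ID of the affected node has to be looked up separately.

```bash
# Hypothetical helper: compare the AZ of an EBS volume with the AZ of an EC2 instance.
# Prints the result; returns 1 on mismatch and 2 if a lookup fails.
check_az() {
  local vol=$1 inst=$2 vz iz
  vz=$(aws ec2 describe-volumes --volume-ids "$vol" \
        --query 'Volumes[0].AvailabilityZone' --output text) || return 2
  iz=$(aws ec2 describe-instances --instance-ids "$inst" \
        --query 'Reservations[0].Instances[0].Placement.AvailabilityZone' --output text) || return 2
  if [ "$vz" = "$iz" ]; then
    echo "same zone: $vz"
  else
    echo "mismatch: volume=$vz instance=$iz"
    return 1
  fi
}
```

For the node distribution itself, `oc get nodes -L topology.kubernetes.io/zone` lists each node with its zone label.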
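Before deleting anything from the console, it may help to list the candidates. This sketch lists EBS volumes in the `available` state (not attached to any instance), optionally limited to one zone. It cannot tell Portworx volumes apart from other unattached volumes, so review the list before running `aws ec2 delete-volume`. `list_unattached_volumes` is a hypothetical name.

```bash
# Hypothetical helper: list unattached EBS volumes as "<id> <zone> <size-GiB>",
# optionally filtered to a single availability zone.
list_unattached_volumes() {
  local zone=${1:-}
  aws ec2 describe-volumes \
    --filters Name=status,Values=available \
    --query 'Volumes[].[VolumeId,AvailabilityZone,Size]' \
    --output text |
  awk -v z="$zone" 'z == "" || $2 == z'
}
```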

I have created a new cluster. Nodes and volumes are distributed properly in all three zones. Attaching the screenshots.

Is the install still failing on the new cluster?

Yes, I create a new cluster every time. Earlier the volumes were not coming up at all; after you made some changes to your repo they come up but detach instantly. We had a similar detaching-volume issue with OCP 4.3 a long time ago, and it was resolved after we opened the ports below on your team’s suggestion:

```bash
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 17001-17020 --source-group $MASTER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 17001-17020 --source-group $WORKER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 111 --source-group $MASTER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 111 --source-group $WORKER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 2049 --source-group $MASTER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 2049 --source-group $WORKER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 20048 --source-group $MASTER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 20048 --source-group $WORKER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 9001-9022 --source-group $MASTER_GROUP_ID
aws ec2 authorize-security-group-ingress --group-id $WORKER_GROUP_ID --protocol tcp --port 9001-9022 --source-group $WORKER_GROUP_ID
```
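The ten rules above follow one pattern (five TCP ports or port ranges, each opened to both the master and the worker security groups), so they can be written as a loop. A minimal sketch, assuming `WORKER_GROUP_ID` and `MASTER_GROUP_ID` are set as in the commands above; `open_px_ports` and its `DRY_RUN` switch are hypothetical additions for previewing the commands.

```bash
# Hypothetical helper: open the Portworx-related TCP ports on the worker security
# group to traffic from both the master and the worker security groups.
# Set DRY_RUN=1 to print the aws commands instead of running them.
open_px_ports() {
  local worker_sg=$1 master_sg=$2 port src
  for port in 17001-17020 111 2049 20048 9001-9022; do
    for src in "$master_sg" "$worker_sg"; do
      set -- aws ec2 authorize-security-group-ingress --group-id "$worker_sg" \
        --protocol tcp --port "$port" --source-group "$src"
      if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
      else
        "$@" || return 1
      fi
    done
  done
}
```

Usage: `open_px_ports "$WORKER_GROUP_ID" "$MASTER_GROUP_ID"`. AWS rejects a rule that already exists, so on a group that already has some of these rules the function stops at the first duplicate.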

The earlier problem was with kernel version detection; it was failing there, not at volume attach/detach. Do you have some time in the next 30-40 minutes to get on a call? I will take a look.

Sure, we can connect.

Can you join https://us02web.zoom.us/j/85195402362 ?

I joined the zoom call.

Try joining the same link again.

What was the resolution for this, please? I’m having the exact same issue.

This happens because RHCOS updates its kernel much more frequently than Portworx is updated here: Cloud Pak for Data standardizes on a fixed (usually trailing/older) version of Portworx, and a given PX version only supports the kernels that were available at the time of its release.

Newer versions of Portworx (2.6.1.5) handle this better, but for now we have been using a workaround script to get past this issue.

Here are the contents (save this as `el8-fslib-fix.sh`, `chmod +x` it, and run it on the problem node):
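To confirm that a node is actually hitting this kernel gap before applying the workaround, one can check whether the running kernel appears in the px-fslibs manifest, which is the same test the workaround script starts with. A minimal sketch, with the default manifest path taken from that script; `fslib_covers_kernel` is a hypothetical helper meant to run on the node itself (for example via `oc debug node/<node>` followed by `chroot /host`). `oc get nodes -o wide` shows each node's kernel version in its KERNEL-VERSION column.

```bash
# Hypothetical helper: succeed if the px-fslibs manifest lists the given kernel
# (defaults: the running kernel and architecture from uname).
fslib_covers_kernel() {
  local manifest=${1:-/opt/pwx/oci/rootfs/pxlib_data/px-fslibs/btrfs.all.el8.kos.manifest}
  local kernel=${2:-$(uname -r)} arch=${3:-$(uname -m)}
  grep -qF "${arch}/${kernel}/" "$manifest"
}
```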

```bash
#!/bin/bash
# Workaround: add px-fslibs entries for a newer RHCOS kernel by cloning the
# newest shipped entry from the same kernel family (e.g. 4.18.0-193.*).

CURKERNEL=$(uname -r)
ARCH=$(uname -m)
EL8_FSLIBS_NM=btrfs.all.el8.kos
EL8_FSLIBS_ARCHIVE=${EL8_FSLIBS_NM}.xz
EL8_FSLIBS_MANIFEST=${EL8_FSLIBS_NM}.manifest
FSLIB_DIR=/opt/pwx/oci/rootfs/pxlib_data/px-fslibs

[ ! -d "${FSLIB_DIR}" ] && echo "Unable to find 'px-fslibs' directory" && exit 1
[ ! -e "${FSLIB_DIR}/${EL8_FSLIBS_ARCHIVE}" ] && echo "Unable to find 'px-fslibs' archive" && exit 1

cd "${FSLIB_DIR}" || exit 1

# Nothing to do if the manifest already lists this kernel.
if [ -e "${EL8_FSLIBS_MANIFEST}" ]; then
  if grep -Eq "${ARCH}/${CURKERNEL}/" "${EL8_FSLIBS_MANIFEST}"; then
    echo "No modification needed: 'px-fslibs' exist for this kernel."
    exit 0
  fi
fi

# Need to update the archive: unpack a working copy.
mkdir -p Unpack && cd Unpack || exit 1
cp "../${EL8_FSLIBS_NM}".* .
tar xJf "./${EL8_FSLIBS_ARCHIVE}"
cd x86_64/ || exit 1

# Kernel family = <version>-<first patch number>, e.g. 4.18.0-193.
KVER=$(echo "${CURKERNEL}" | awk -F- '{print $1}')
PATCH=$(echo "${CURKERNEL}" | awk -F- '{print $2}' | awk -F. '{print $1}')
KERN=${KVER}-${PATCH}

# Newest fslib directory shipped for the same family.
NEXT_FSLIB=$(grep -E "${KERN}\.[0-9]" "../${EL8_FSLIBS_MANIFEST}" | awk -F'/' '{print $2}' | sort -r | head -n 1)
[ -z "${NEXT_FSLIB}" ] && echo "Unable to find next available 'px-fslibs' for this kernel." && exit 1

# Reuse those modules under the current kernel's name.
cp -a "${NEXT_FSLIB}" "${CURKERNEL}"
cd ../

# Repack (xz reads extra options from XZ_OPT) and regenerate the manifest.
XZ_OPT=-9 tar -Jhcf btrfs.new.xz x86_64 || { echo "Failed to create updated 'px-fslibs' archive."; exit 1; }
tar -tJf ./btrfs.new.xz | grep -E 'btrfs\.ko$' > btrfs.new.manifest || { echo "Failed to create updated 'px-fslibs' manifest."; exit 1; }

cp ./btrfs.new.manifest "../${EL8_FSLIBS_MANIFEST}"
cp ./btrfs.new.xz "../${EL8_FSLIBS_ARCHIVE}"
cd

echo "Done updating 'px-fslibs' archive."

#systemctl start portworx; journalctl -lfu portworx\*

# copy new files to other nodes
# /opt/pwx/oci/rootfs/pxlib_data/px-fslibs/btrfs.all.el8.kos.manifest
# /opt/pwx/oci/rootfs/pxlib_data/px-fslibs/btrfs.all.el8.kos.xz
```

Run this on one node and restart Portworx via systemctl (as in the commented-out line at the end of the script). Then, for every other node, copy the two files listed at the end of the script to the same location and restart Portworx there as well.
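The heart of the workaround is choosing which shipped kernel's modules to reuse. The sketch below isolates that step as a pure function so it can be tried against a manifest file: it derives the `<version>-<patch>` family (e.g. `4.18.0-193`) from the kernel release and returns the highest matching kernel directory, relying on manifest lines of the form `x86_64/<kernel>/.../btrfs.ko` as produced by the script. It uses GNU `sort -V` (version sort) so that, for example, `193.14.3` ranks above `193.6.3`; `pick_fallback_fslib` is a hypothetical name.

```bash
# Hypothetical helper: given a kernel release and a px-fslibs manifest, print the
# highest shipped kernel directory from the same <version>-<patch> family.
pick_fallback_fslib() {
  local curkernel=$1 manifest=$2 kver patch fam
  kver=${curkernel%%-*}     # 4.18.0-193.28.1.el8_2.x86_64 -> 4.18.0
  patch=${curkernel#*-}     # -> 193.28.1.el8_2.x86_64
  patch=${patch%%.*}        # -> 193
  fam="${kver}-${patch}."
  # Field 2 of each manifest line is the kernel directory name.
  awk -F/ -v fam="$fam" \
    'index($2, fam) == 1 && substr($2, length(fam) + 1, 1) ~ /[0-9]/ { print $2 }' \
    "$manifest" | sort -u -V -r | head -n 1
}
```

An empty result means no kernel from the same family was shipped, which corresponds to the script's "Unable to find next available 'px-fslibs'" exit.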